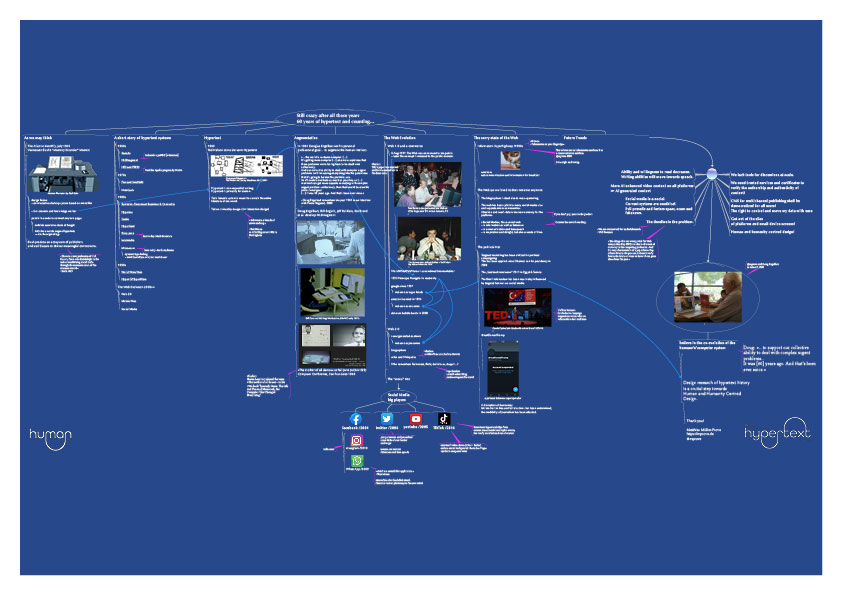

Still Crazy After All These Years.

60 Years of Hypertext and Counting…

Keynote @ Workshop HUMAN’21 at ACM Hypertext 2021

Keynote at HUMAN’21 – 4th Workshop on Human Factors in Hypertext

This year marks the 30th anniversary of the World Wide Web. But the notion of hypertext as a form of non-sequential writing dates back to the early 1960s. Hypertext and hypermedia systems as useful tools for creative thought have almost been forgotten along with several other flavors of pioneering systems.

Even the Web went through several stages: from a cross-platform information medium to e-commerce, Web 2.0, the mobile web, and the so-called social networks. Today, several trending online platforms are successful even though they are mobile-only, or even app-only, and provide no decent mechanisms for cross-linking to content on other servers. Their business goals seem to demand that users spend as much time as possible on the platform’s own pages, swiping through endless content streams.

The keynote will present some of the lost cyberspaces in order to revive the original motivation for future development. The urgent and complex problems of the world can only be tackled collectively, with a powerful and connected online medium for personal benefit and the collective commons.

2 Pages

DOI: https://doi.org/10.1145/3468143.3483928

1 Hypertext

Vannevar Bush’s Memex [1] (the name is derived from “memory extender”) is a conceptual prototype to support the work of scientists. The proposed interaction with the system is remarkable and still visionary, as not all of its proposed features have been adopted by today’s personal and connected computers. The concept of trails, which create a sequence of pages according to an associative chain of thoughts, is considered to be the first sketch of hyperlinks. In fact, trails resemble the idea of hashtags on social media platforms much more closely. Since 1945, Bush’s Memex has remained design fiction.

The term Hypertext was coined by Theodor Holm Nelson in the early 1960s. Hypertext is a form of non-sequential writing; it lets authors arrange text blocks on screen as easily as real cut & paste with scissors and glue in the analog paper world.

1.1 Augmentation

In 1961 Douglas C. Engelbart set the agenda for his professional career. As he recalls in an interview with Frode Hegland [2]:

[…] the world is so damn complex […] It’s getting more complex […] at a more rapid rate that these problems we’re facing have to be dealt with collectively.

And our collective ability to deal with complex urgent problems isn’t increasing at anything like the parent rate that it’s going to be that the problems are.

So if I could contribute as much as possible, so […] mankind can get more capable in dealing with complex urgent problems collectively, then that would be a terrific professional goal.

At the Fall Joint Computer Conference (FJCC ’68), Doug Engelbart and his team presented NLS/Augment live on stage: a working system to support teams with interactive text editing, hyperlinking, outlining, video conferencing between San Francisco and Menlo Park, and new input devices like the mouse and the chording keyset. A year later, in 1969, the Stanford Research Institute’s Augmentation Research Center (SRI-ARC) became the second node of the ARPAnet.

All hypertext systems of the 1960s, 70s and 80s are discussed in [3].

2 The Web Evolution

The World Wide Web itself is now 30 years old. Tim Berners-Lee and Robert Cailliau presented it in the demo area of ACM Hypertext ’91. The Web took a pragmatic approach to hypertext by ignoring advanced features such as bi-directional linking or a central link database. Furthermore, it was released into the public domain, so everyone was allowed to use the technology without license costs and to develop their own applications. Netscape Navigator was one of the browsers that omitted the capability of editing Web pages for the sake of reaching the market quickly. The read/write Web of Berners-Lee and Cailliau grew into a read-only Web for consumers and e-commerce.

2.1 Web 2.0

This changed during the first decade of the new millennium. The Web 2.0 phase introduced blogs and wikis, which enabled people to publish and edit dynamic pages. The paradigm shift from classical media to user-generated content was a revolution for both industry and society.

New companies were founded to seize the momentum and eventually to monetize users’ screen time and attention by selling ads. Facebook was founded in 2004, YouTube in 2005, Twitter in 2006, WhatsApp in 2009 and Instagram in 2010. The latter two were acquired by Facebook Inc., while Twitter and TikTok (since 2016) remain independent.

The social media landscape is divided among very few big players. Even worse, the platforms try to keep users inside their domains as long as possible by offering endless activity streams and news feeds. Hyperlinks to content hosted by other companies hardly exist.

2.2 The Political Net

The same algorithms that identify customers with a high conversion rate when exposed to ads can also be used to influence their political opinions. For instance, during the Brexit referendum in 2016, approx. 1 billion banners were shown on Facebook to UK voters who had been categorized as vulnerable to fear and hate. This opaque micro-targeting had a significant effect on the vote that led the UK to leave the European Union. [4]

Free speech is a democratic right, and the same should hold for free speech online. But the system dynamics of cascading forwards and retweets have been underestimated. The social media platforms do not offer sufficient tools to control fake news or hate speech, which have a poisoning and destructive effect on societies. It is the responsibility of the companies and system designers to review their business models under ethical considerations.

3 Future Trends

The willingness and ability to read (long) articles and posts will decrease. Writing skills will be substituted by speech recognition. The trend toward entertainment and emotional, sensational content will continue. We will move from user-generated content to AI-enhanced content, or even to deep fakes that are entirely driven by artificial intelligence to optimize the individual user’s reactions.

To avoid this dystopia, we need trusted services that verify the authorship and authenticity of posts. To break out of the social media silos, we need open and usable content management systems (CMS). People should control the flow of their personal data (cf. [5]).

We need a human- and humanity-centered design that supports responsible and ethical design decisions on all levels of platform development and operations.

Compared to 1961, we do not have fewer »complex urgent problems« on our planet. Online systems should connect people and support discussions and action-oriented solutions. Therefore, we should work together on a co-evolution of the human-computer-network system.

REFERENCES

- Vannevar Bush. 1945. As We May Think. In: Interactions 3(2, Mar.) p. 35-46, 1996. Reprinted from The Atlantic Monthly 176 (July 1945). DOI: https://doi.org/10.1145/227181.227186

- Frode Hegland. 2000. Engelbart Audio Interviews

- Matthias Müller-Prove. 2002. Vision and Reality of Hypertext and Graphical User Interfaces. Report FBI-HH-B-237, University of Hamburg

- Carole Cadwalladr. 2019. Facebook’s role in Brexit – and the threat to democracy. In TED2019

- Tim Berners-Lee et al. 2001. Solid Project

The Slide

Open as PDF :: The little blue balls are active hyperlinks.

References

As we may think

- Bush, Vannevar: As We May Think. In: Interactions 3(2, Mar.) p. 35-46, 1996. Reprinted from The Atlantic Monthly 176 (July 1945)

A short story of hypertext systems

Hypertext

Software is a branch of movie making. –Ted Nelson at ACM Hypertext 2001 in Nottingham

Augmentation

»…the world is so damn complex […]

It's getting more complex […] at a more rapid rate that these problems we’re facing have to be dealt with collectively.

And our collective ability to deal with complex urgent problems isn’t increasing at anything like the parent rate that it's going to be that the problems are.

So if I could contribute as much as possible, so […] mankind can get more capable in dealing with complex urgent problems collectively, then that would be a terrific professional goal.

[…] It was 49 years ago. And that's been ever since.«

–Doug Engelbart remembers the year 1961 in an interview with Frode Hegland, 2000

The Web Evolution

The sorry state of the Web

Future Trends

The things that are wrong with the Web today are due to this lack of curiosity in the computing profession. And it’s very characteristic of a pop culture. Pop culture lives in the present; it doesn’t really live in the future or want to know about great ideas from the past.

–Alan Kay 2007

Feedback – please leave a message

hci.social/@mprove or norden.social/@chronohh

mprove@acm.org